Large Language Models for Recommender Systems IV - Reasoning

Can GRPO-induced reasoning improve recommendation performance?

Introduction

Large Language Models (LLMs) can potentially redefine recommender systems by transcending traditional collaborative filtering and matrix factorization approaches. Unlike these traditional methods, which rely on implicit user-item interaction patterns, LLMs process natural language context (e.g., movie plots, user reviews, or metadata) to infer preferences. For example, an LLM might deduce that a user who enjoyed Inception and Memento appreciates nonlinear storytelling, even if those films were never explicitly linked in training data. This capability enables LLMs to generate reasoned recommendations, offering explanations like "Recommended for fans of mind-bending narratives"—a feature critical for user trust and engagement.

Past Work

In prior work, I explored fine-tuning smaller LLMs on labeled user engagement data (e.g., liked/disliked movies) and synthetic reasoning generated by frontier models like GPT-4o. While these approaches improved accuracy, they operated in a supervised fine-tuning (SFT) paradigm. It was observed that the models can benefit significantly from curated reasonings, yielding high accuracy in predicting whether users will like or dislike a movie. Given the moment that ‘reasoning’ is having in the discourse around LLMs, and the gains in using synthetic reasoning traces, an immediate next question pops up - how to build and enhance reasoning in small LLM-based recommenders.

Reasoning in LLMs

Reasoning in large language models refers to their ability to process information through structured, logical steps—akin to human problem-solving—rather than relying solely on pattern recognition or memorization. This involves:

Decomposing Complex Tasks: Breaking down a problem into intermediate steps (e.g., solving a math problem by first identifying variables, recalling the concepts involved, then conducting calculations).

Drawing Inferences: Synthesizing implicit connections between concepts (e.g., recognizing that “If all mammals breathe air and whales are mammals, whales must breathe air”).

Generating Explanations: Articulating the why behind a prediction or answer.

Techniques to Cultivate Reasoning in LLMs

Chain-of-Thought (CoT) prompting nudges the model to generate intermediate reasoning steps before producing a final answer. This can be simple e.g. adding ‘Think step by step’ to the prompt.

Synthetic Reasoning Data: Frontier LLMs like GPT-4 or Claude 3 can be used to generate synthetic reasoning traces to train smaller models. This is something I explored in a prior work. A bottleneck in this approach is that one needs to collect large amount of reasoning data.

Pure Reinforcement Learning: DeepSeek’s research on R1-Zero demonstrated that reasoning can emerge as a learned behavior through pure reinforcement learning (RL), without human supervision or predefined instructions. This opens up avenues to train reasoner models without requiring large swathes of reasoning data, a particular interesting paradigm of small reasoning model emerges.

GRPO

The DeepSeek team developed Group Relative Policy Optimization (GRPO) to drive the reinforcement process. This method efficiently optimizes responses without requiring a value function, unlike Proximal Policy Optimization (PPO). Instead, the learning process was guided by well-defined reward functions—such as granting higher scores for correct answers and applying small penalties for formatting errors—encouraging the model to refine its reasoning.

It works as (from Unsloth blog):

The model generates groups of responses.

Each response is scored based on correctness or another metric created by some set reward function rather than an LLM reward model.

The average score of the group is computed.

Each response's score is compared to the group average.

The model is reinforced to favor higher-scoring responses.

The success of DeepSeek’s work suggests that pure RL can be a viable path to developing reasoning capabilities in language models. I intend to explore this recipe to build a RecSys reasoner model in this work.

Current Work

This work advances the series on ‘LLMs for RecSys’ by investigating pure reinforcement learning (RL)—specifically, the GRPO algorithm and DeepSeek R1-Zero recipe—to train LLMs to generate not just like/dislike predictions for the target movies, but also reasoning traces based on the past interactions.

GRPO for RecSys

Recall our set up - given a user’s last 10 engaged movies, predict whether the user will like or dislike a target movie. I showed in prior work that reasoning provides a useful signal that can boost performance. Rather than SFT on reasoning data, in this work we use RL to induce reasoning into the model. The starting point is a small LLM model, here Qwen 1.5B Instruct. Note that we are starting with an Instruct model as opposed to a base model as in the previous studies. This is motivated by the other works on training reasoner models Tiny-Zero, wilccbb, unsloth etc. I followed the efficient implementation of unsloth for this work.

The first step is to construct prompts from the training data. Instructions to add provide answer in a reasoning format are added.

<|im_start|>system

Respond in the following format:

<reasoning>

...

</reasoning>

<answer>

...

</answer>

<|im_end|>

<|im_start|>user

Based on a user's viewing history, predict whether the user will like the target movie or not.

## User History:

- Disliked "Hideaway (1995)"

- Disliked "Stigmata (1999)"

- Disliked "Crush, The (1993)"

- Liked "Schindler's List (1993)"

- Disliked "Henry V (1989)"

- Liked "Once Upon a Time in the West (1969)"

- Disliked "Outlaw Josey Wales, The (1976)"

- Liked "War, The (1994)"

- Disliked "Jumanji (1995)"

- Liked "Fargo (1996)"

## Target movie:

"Stuart Saves His Family (1995)"

<|im_end|>

<|im_start|>assistant

I leveraged the correctness and format reward functions developed by willcbb. Please checkout the notebook for more details. As this requires substantial compute and time, I only trained for 2500 steps (10K data samples) which ran for over 12 hours on a single A100 GPU on Colab. This is the same 10K samples that I had used to train the SFT-based reasoning models in the previous study.

Reinforcement at Play

It is fascinating to see the model response evolve as it tries to optimise for reward. Pre-GRPO at Step 0, the response looked like this:

Response:

Given the user's history of predominantly negative reviews for target movies, it is reasonable to predict that the user is unlikely to like "Hard Rain (1998) either. The user's previous experiences suggest a dislike for mainstream action and adventure films, which the target movie seems to represent. Therefore, based on the user's viewing history, this prediction aligns with their patterns of disfavoring such genres and movies.Somewhere (Step 100) the model starts providing reasoning albeit not in the right format

**Reasoning:**

The user's movement through the target list of movies demonstrates a consistent dislike towards traditional action and adventure films from the 1990s. The pattern of "Disliked" with each entry suggests that this individual does not enjoy films in these genres. Since the user's taste in movies aligns with the target movie's content, it is predicted that the user will not like "Hard Rain (1998)" despite its title suggesting "Hard Days Passage," as the user's past dislikes for this type of film are the most direct predictor of their likely reaction.Around Step 300, an interesting pattern emerge where we get the closing tokens </reasoning> and </answer>.

Response:

Based on the user's history of liking and disliking certain movies, particularly those from the Star Wars and Jurassic Park franchises, as well as the Space Age movies they have seen, there is a possibility that the user may like "Star Trek: Insurrection (1998)" as it falls under the category of science fiction and space adventure. However, it is not guaranteed.

</reasoning>

</answer> The system stays in this local minima, then we get the correct answer format at Step 550, still wrong answer type (boolean expected as per the reward) though

Response:

Based on the user's history, they have liked some action/comedy genres (e.g., "Lethal Weapon 2", "Shanghai Noon", "Total Recall", "Mask of Zorro", "Edge") and disliked other action/comedy genres (e.g., "True Lies", "Big Kahuna", "Lethal Weapon", "Shanghai Noon", "Men in Black"). Therefore, it is likely that the user will also like the target movie "He Got Game (1998)" which is an action comedy film.

</reasoning>

<answer>

Like

</answer>Around step 600, the response has correct answer format. This is Step 620 -

Response:

Given the user's history of liking movies such as "Sixth Sense," "Scream 3," "By the Sea," and "Taking of Pelham One Two Three," it is likely that the user would enjoy the movie "Talented Mr. Ripley, The (1999)." This type of movie often falls into the suspense thriller genre, which the user has shown interest in recent years. Therefore, the user is highly likely to enjoy the target movie.

</reasoning>

<answer>

Yes

</answer>The model is in this local minima for a long time. Not until step 1800, that we start getting the right format, not necessarily the correct reasoning or the answer.

Response:

<reasoning>

Based on the user's viewing history, it appears that the user generally likes films with themes related to education, social issues, and strong emotional narratives. "Clara's Heart" (1988) is a drama that revolves around the life of a young woman who becomes a teacher, dealing with the challenges of teaching in a difficult environment and the emotional journey of both the protagonist and the students. Given the user's pattern of liking films with such themes, it is likely that they will enjoy "Clara's Heart" (1988).

</reasoning>

<answer>

Yes

</answer>Results

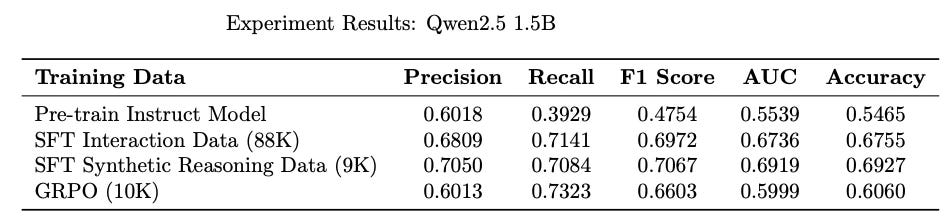

The RL fine-tuned model was evaluated on a held-out real MovieLens test set (same as in the earlier studies), judging them solely on binary label accuracy (like/dislike). Below table shows the comparison with SFT approaches:

Pre-trained Instruct model which is the Qwen2.5-1B-Instruct model used without any additional SFT on user interactions.

SFT on Interaction Data: Base Qwen2.5-1B model with additional SFT on only engagement data i.e. prompts containing past 10 movies and the target movie with only Yes/No responses. The whole dataset containing 88K samples were used.

SFT on Synthetic Reasoning Data: Base Qwen2.5-1B model with additional SFT on 9K samples containing past 10 movies and the target movie with responses including reasoning as well as the Yes/No output.

GRPO: Instruct Qwen2.5-1B-Instruct model with RL fine-tuning using GRPO mechanism. This is the setup of the current work.

Observations:

The GRPO does show an improvement over zero and few-shot setups.

Interestingly SFT on reasoning data performs better than RL-based fine-tuning. It is possible that larger models or longer training runs improve the performance of the GRPO, but SFT on reasoning data remains a formidable baseline.

This has also been shown in other studies s1: Simple test-time scaling, Sky Thought etc.

Model

The fine-tuned model is uploaded to the Hugging Face as januverma/Qwen2.5-1.5B-GRPO from where it can downloaded locally to be used.

from unsloth import is_bfloat16_supported

import torch

max_seq_length = 1024 # Can increase for longer reasoning traces

model, tokenizer = FastLanguageModel.from_pretrained(

model_name = "januverma/Qwen2.5-1.5B-GRPO",

max_seq_length = max_seq_length,

load_in_4bit = True, # False for LoRA 16bit

fast_inference = True, # Enable vLLM fast inference

gpu_memory_utilization = 0.5, # Reduce if out of memory

)

SYSTEM_PROMPT = """

Respond in the following format:

<reasoning>

...

</reasoning>

<answer>

...

</answer>

"""

input = '''

Based on a user's viewing history, predict whether the user will like the target movie or not.

## User History:

- Disliked "Hideaway (1995)"

- Disliked "Stigmata (1999)"

- Disliked "Crush, The (1993)"

- Liked "Schindler's List (1993)"

- Disliked "Henry V (1989)"

- Liked "Once Upon a Time in the West (1969)"

- Disliked "Outlaw Josey Wales, The (1976)"

- Liked "War, The (1994)"

- Disliked "Jumanji (1995)"

- Liked "Fargo (1996)"

## Target movie:

"Stuart Saves His Family (1995)"

'''

text = tokenizer.apply_chat_template([

{"role" : "system", "content" : SYSTEM_PROMPT},

{"role" : "user", "content" : input},

], tokenize = False, add_generation_prompt = True)

from vllm import SamplingParams

sampling_params = SamplingParams(

temperature = 0.8,

top_p = 0.95,

max_tokens = 2048,

)

output = model.fast_generate(

text,

sampling_params = sampling_params,

)[0].outputs[0].text

print(output)Conclusion

In this work, I investigated the potential of pure RL to fine-tune a small LLM model into a reasoner model. It appears that the DeepSeek R1-zero recipe of using GRPO with correctness and formatting reward functions works and is able to create the reasoning process without any human guidance. However, it is not obvious whether the reasoning thus generated is meaningful and if it translates to better performance. Via this study, I found that SFT on well-curated reasoning data turns out to a robust baseline. In fact, in this work, we see SFT on reasoning data performing better than RL-based fine-tuning. This is a hotly studied area in the recent times.

I’ll continue to explore this line of work. Please subscribe to this newsletter.

If you find this work useful, I would appreciate if cite it as:

@misc{verma2025llm4recsysIV,

title={Large Language Models for Recommender Systems IV: Reasoning},

author={Janu Verma},

year={2025},

url={\url{https://januverma.substack.com/p/large-language-models-for-recommender-461}},

note={Incomplete Distillation}

}