Joint Retrieval and Recommendation Modeling

Introduction

Search engines and recommendation systems are the backbone of countless modern services, helping users discover exactly what they need, often before they even know they need it.

Search deals with explicit queries: a user types in a query (e.g., "crime thriller set in American South") and the system retrieves a ranked list of items (e.g., movies, shows, or videos) from a corpus that are relevant to the query.

Recommendation relies on implicit user behavior: given a user’s past interactions (e.g., a sequence of movies they watched), the system retrieves a ranked list of items from a corpus that are relevant to the user’s interests.

In industrial settings, these two applications (information retrieval and recommender systems) typically operate on separate tracks, each with its own models, featurization, and training setups. The main reason for this arrangement is that their inputs differ: one is an explicit textual context (the search query), while the other is a user context (user history, preferences, or an ongoing session). Accordingly, early solutions also differed: search engines used text vectorization techniques like tf-idf, while recommender systems used collaborative filtering or matrix factorization. As a result, teams often end up maintaining completely bespoke models for each use case (e.g., one model for search, another for “more like this” recommendations, yet another for homepage personalization). This leads to high maintenance costs and technical debt, with multiple pipelines to build and tune.

Joint Modeling

Joint modeling offers an appealing alternative: treat search and recommendation as two sides of the same coin. At a fundamental level, both are about connecting users with relevant content from a corpus. Both can be framed as a top-K retrieval problem from the same item corpus, differing mainly in the source of context (query vs. user profile). By unifying them, we aim to reduce system complexity (one model instead of many) and unlock transfer learning opportunities (signals from one task improving the other). In other words, insights gained from how users interact with content (recommendation data) could help answer search queries better, and vice versa.

In this post, we’ll explore cases of joint modeling of search and recommendation tasks. We will discuss why this unification makes sense, highlight real examples from Netflix and Spotify, and delve into research findings on the benefits of joint training.

Joint Modeling in Dense Setup

Modern search and recommendation systems increasingly use dense vector representations to match users with items. The typical architecture is a bi-encoder (also known as a two-tower model).

In search (bi-encoder): A neural network (tower) encodes the user’s query into a fixed-length vector. Another network (with the same architecture, or possibly the very same network) encodes each item (e.g., a document, song, or video) into a vector in the same embedding space. The system precomputes and stores embeddings for all items in the corpus. In response to a query, it computes the query’s embedding and then finds the items whose vectors are most similar (e.g., via cosine similarity) to the query vector.

In recommendation (two-tower): The setup is analogous, but instead of a textual query, the first tower encodes the user context into an embedding. This context can be the user’s ID, their past interaction history, or some representation of their preferences. The second tower encodes items into the same vector space. By finding the nearest item vectors to the user context vector, the system retrieves the top-K recommendations for that user.

In both cases, retrieval can be done efficiently with approximate nearest neighbor (ANN) search over the precomputed item embeddings.
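The retrieval step is the same for both towers, so a minimal sketch may help. It assumes the towers have already produced embeddings, and random vectors stand in for them here. It scores every item exactly with cosine similarity. At scale, an ANN index (for example, a FAISS index) would approximate the hypothetical `top_k_items` helper.

```python
import numpy as np

def normalize(x: np.ndarray) -> np.ndarray:
    """L2-normalize along the last axis so dot products equal cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def top_k_items(context_vec: np.ndarray, item_matrix: np.ndarray, k: int) -> np.ndarray:
    """Exact top-k retrieval by cosine similarity (an ANN index would approximate this)."""
    scores = normalize(item_matrix) @ normalize(context_vec)
    # argpartition selects the k best in linear time; then sort only those k.
    top = np.argpartition(-scores, k - 1)[:k]
    return top[np.argsort(-scores[top])]

rng = np.random.default_rng(0)
item_embeddings = rng.normal(size=(1000, 64))  # precomputed by the item tower
query_embedding = rng.normal(size=64)          # stand-in for a query-tower output
user_embedding = rng.normal(size=64)           # stand-in for a user-tower output

# The same function serves search and recommendation; only the context vector differs.
print(top_k_items(query_embedding, item_embeddings, k=5))
print(top_k_items(user_embedding, item_embeddings, k=5))
```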

The key idea is that both queries and users are just different types of “contexts” that map into a common vector space where items also live. A well-trained embedding model will place an item close to a query if the item is relevant to that query; similarly, it will place an item close to a user’s vector if that item suits the user’s tastes (given their history).

Joint modeling in a dense setup means we use a single model (or closely linked models) to handle both tasks. Instead of training separate bi-encoders for search and for recommendation, we train one unified architecture that can intake either a query or a user profile and produce an embedding to be matched with item embeddings. There are a few ways to achieve this:

Shared embedding space: We might use the same item encoder for both tasks (so item representations are universal), and have two different encoders for queries and users. During training, we alternate between search data and recommendation data, so that the item encoder and the context encoders all learn together. This way, the item representation is informed by both how it’s described in text (search context) and who interacts with it (user context).

Single context encoder with task indicator: An even bolder approach is to have one unified context encoder that takes an input which could be “query text” or “user history”, along with a flag or token that indicates the task type. For example, a transformer-based encoder might accept an input like “[TASK=SEARCH] workout music” or “[TASK=RECOMMEND] User42’s history…”. The model internals can learn to handle these appropriately.
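The single-encoder variant can be sketched roughly as follows. This is a toy under stated assumptions. The `[TASK=...]` prefix mirrors the example above. A shared hashed token-embedding table with mean pooling stands in for the transformer. The names `build_context` and `encode_context` are hypothetical.

```python
import zlib
import numpy as np

DIM = 32
N_BUCKETS = 4096
rng = np.random.default_rng(0)
# Hypothetical shared token-embedding table: the hashing trick maps any token
# (query words, item IDs, task tokens) to one of N_BUCKETS rows.
token_table = rng.normal(size=(N_BUCKETS, DIM))

def build_context(task: str, payload) -> list[str]:
    """Prefix the input with a task token so one encoder can serve both tasks."""
    if task == "SEARCH":
        tokens = payload.lower().split()         # payload: query text
    elif task == "RECOMMEND":
        tokens = [f"item_{i}" for i in payload]  # payload: list of item IDs
    else:
        raise ValueError(f"unknown task: {task}")
    return [f"[TASK={task}]"] + tokens

def encode_context(tokens: list[str]) -> np.ndarray:
    """Mean-pool token embeddings and L2-normalize (a stand-in for a transformer)."""
    rows = [zlib.crc32(t.encode("utf-8")) % N_BUCKETS for t in tokens]
    vec = token_table[rows].mean(axis=0)
    return vec / np.linalg.norm(vec)

search_vec = encode_context(build_context("SEARCH", "workout music"))
rec_vec = encode_context(build_context("RECOMMEND", [15, 37, 9]))
print(search_vec.shape, rec_vec.shape)  # both live in the same 32-d space
```

Because the task token is just another input token, the encoder can shift its output depending on the task without needing separate towers.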

What are the benefits?

A unified dense model lets the two tasks inform each other. For instance, if a particular movie is very popular in the recommendation system, that popularity signal can inform search ranking, potentially placing the movie higher even when the query evidence is sparse. Conversely, if the search model has learned a lot about how to interpret text queries (e.g., that "thriller with spy" relates to certain movies), those learned item relationships can help the recommendation side by improving item embeddings (e.g., placing spy thrillers together in the vector space). This cross-pollination can yield better overall relevance.

Maintaining one system is easier than maintaining two. Things like feature engineering, representation learning, and model updates can be done in one codebase. In real-world teams, this means less technical debt and faster iteration. It also means the same infrastructure (indexes, databases, deployment pipelines) serves both, simplifying the stack.

Netflix UniCoRn

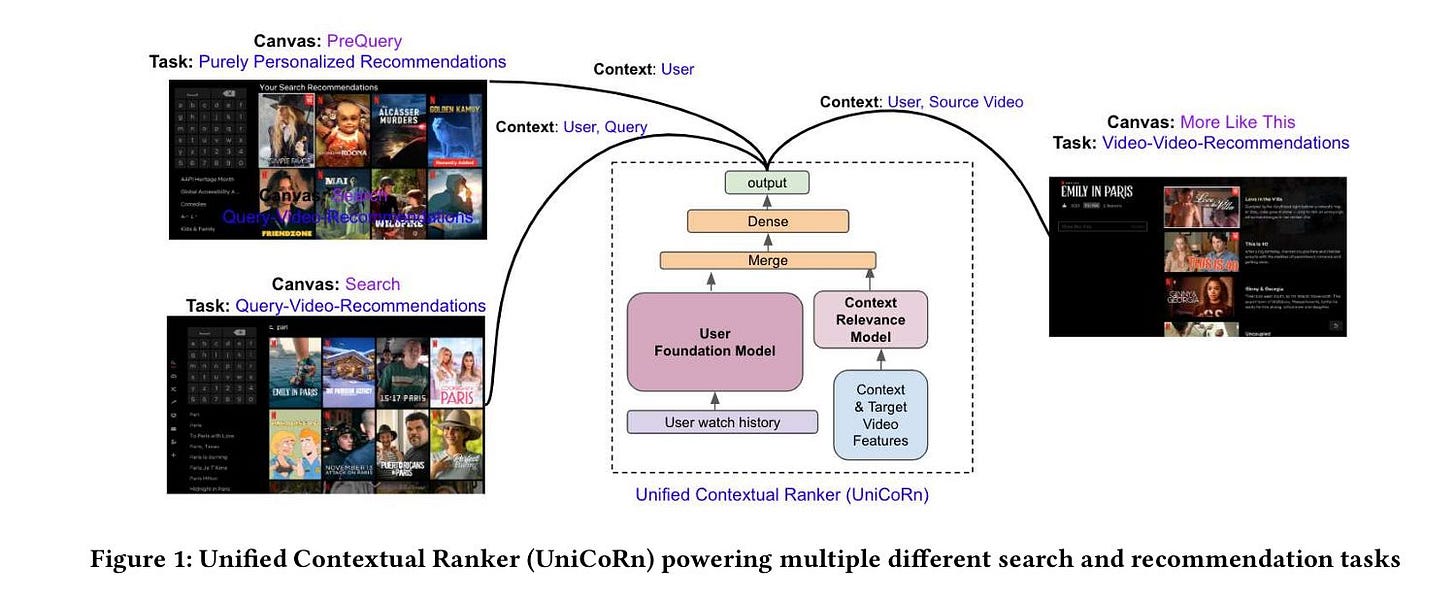

A concrete example of joint dense modeling in action is Netflix’s Unified Contextual Ranker (UniCoRn). As one can imagine, Netflix has multiple recommendation and search surfaces:

search bar where users type queries

homepage rows of recommendations

“more like this” suggestions on a title

Historically, they had separate algorithms for each. The UniCoRn project aimed to unify these with one deep learning model. Their approach is to create a shared broader context for all tasks, comprising the user ID, text query, country, source entity ID, and task. The other input (tower) of the model is the target entity ID, and the output of the model is the probability of positive engagement, i.e.

$$P(\text{positive engagement} \mid \text{user ID}, \text{query}, \text{country}, \text{source entity ID}, \text{task}, \text{target entity ID})$$

Not all components of the shared broader context are available for all tasks. UniCoRn uses the following imputation strategy:

For the search task, use a null value for the missing source entity ID.

For the recommendation task, use the tokens of the item’s display name as the query.

Note that one can also leverage large language models (LLMs) to impute missing context; for example, synthetic query generation has gained traction recently.
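The imputation strategy above can be sketched as a small preprocessing step. This is an illustration, not Netflix's code. The field names, the `<NULL>` sentinel, and the `impute` helper are hypothetical. It also assumes that, for recommendation, the display name comes from the source entity and that lowercase whitespace splitting serves as the tokenizer.

```python
from dataclasses import dataclass, replace
from typing import Optional

NULL_ENTITY = "<NULL>"  # hypothetical sentinel for a missing source entity

@dataclass(frozen=True)
class UnifiedContext:
    """Shared context fields as listed for UniCoRn; names are illustrative."""
    user_id: str
    country: str
    task: str                        # "search" or "recommendation"
    query: Optional[str] = None
    source_entity_id: Optional[str] = None

def impute(ctx: UnifiedContext, display_names: dict[str, str]) -> UnifiedContext:
    """Fill in the fields that a given task does not naturally provide."""
    if ctx.task == "search":
        # Search has a typed query but no source entity: use a null value.
        return replace(ctx, source_entity_id=ctx.source_entity_id or NULL_ENTITY)
    if ctx.task == "recommendation":
        # No typed query: assume the source item's display name acts as the query.
        if ctx.query is None and ctx.source_entity_id is not None:
            tokens = display_names[ctx.source_entity_id].lower().split()
            return replace(ctx, query=" ".join(tokens))
        return ctx
    raise ValueError(f"unknown task: {ctx.task}")

names = {"m1": "Stranger Things"}
s = impute(UnifiedContext("u7", "US", "search", query="crime thriller"), names)
r = impute(UnifiedContext("u7", "US", "recommendation", source_entity_id="m1"), names)
print(s.source_entity_id, "|", r.query)  # <NULL> | stranger things
```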

They trained this model on a combined dataset of user engagement events from both search sessions and recommendation impressions. Item and context features were fed through shared networks to produce a ranking score.
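A toy sketch of the training signal follows. It is not Netflix's architecture, which this post does not detail. Each context field and the target entity get an embedding. The concatenated vector goes through one hidden layer and a sigmoid to produce an engagement probability. Binary cross-entropy is then computed over a log that mixes search and recommendation events. Field names, sizes, and the two log rows are hypothetical, and the whole query string gets a single embedding for brevity. Only the forward pass and loss are shown; there is no optimizer.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM, HIDDEN = 8, 16
FIELDS = ["user_id", "query", "country", "source_entity_id", "task", "target_entity_id"]

# Hypothetical per-field embedding lookups (plain dicts keep the sketch small).
tables = {f: {} for f in FIELDS}

def embed(field: str, value: str) -> np.ndarray:
    """Lazily create a random embedding for each (field, value) pair."""
    if value not in tables[field]:
        tables[field][value] = rng.normal(scale=0.1, size=DIM)
    return tables[field][value]

W1 = rng.normal(scale=0.1, size=(len(FIELDS) * DIM, HIDDEN))
w2 = rng.normal(scale=0.1, size=HIDDEN)

def engagement_prob(example: dict) -> float:
    """Concatenate all field embeddings, apply one ReLU layer, then a sigmoid."""
    x = np.concatenate([embed(f, example[f]) for f in FIELDS])
    h = np.maximum(0.0, x @ W1)
    return float(1.0 / (1.0 + np.exp(-(h @ w2))))

def bce(p: float, label: int) -> float:
    """Binary cross-entropy for one event, with clipping for numerical safety."""
    eps = 1e-7
    p = min(max(p, eps), 1 - eps)
    return float(-(label * np.log(p) + (1 - label) * np.log(1 - p)))

# One search event and one recommendation event share the same model and loss.
logs = [
    ({"user_id": "u1", "query": "spy thriller", "country": "US",
      "source_entity_id": "<NULL>", "task": "search", "target_entity_id": "m9"}, 1),
    ({"user_id": "u1", "query": "stranger things", "country": "US",
      "source_entity_id": "m1", "task": "recommendation", "target_entity_id": "m2"}, 0),
]
loss = np.mean([bce(engagement_prob(x), y) for x, y in logs])
print(f"mean BCE over mixed search + rec events: {loss:.4f}")
```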

Key takeaways from Netflix’s case:

Single model, multiple tasks: UniCoRn could rank results for a typed query and also do item-to-item recommendation, by just populating the input fields differently.

Task awareness: Including a “task type” input helped the model learn that, say, the importance of certain features differs between search and recommendation. The model could balance trade-offs (for example, diversity vs. precision) depending on task.

Feature sharing: They found that some features generalize well. For instance, a representation of a movie (embedding from its metadata) is useful whether someone is searching for it or it’s being recommended. By sharing those representations, the model doesn’t have to learn them twice.

Imputing missing data: By cleverly substituting missing pieces (like using item display names as pseudo-queries in a recommendation scenario), they ensured the model saw a more complete feature space and could discover correlations across tasks (e.g. if a query text happens to match an item that was also recommended organically).

The dense joint model approach has proven quite effective in practice. It essentially treats “query vs. user” as just input variations. The intuition is that the model becomes more data-rich because it can learn from two types of signals. If one type of signal is sparse (maybe few search queries for a niche item), the other might be plentiful (the item appears in many users’ watch histories), and the combined model can still learn a good representation for that item.

Joint Modeling in Generative Setup

Embedding-based retrieval is powerful, but it still relies on constructing and searching through a vector index of items. Recently, with the rise of large language models (LLMs), alternative paradigms have emerged: generative search and generative recommendation. Instead of retrieving items via nearest-neighbor search in an embedding space, a generative model simply outputs the identifier of an item directly as text.

Let’s use a simplified analogy to understand how this might work. Imagine each item in our collection (each movie, song, product, etc.) has a unique ID, like “item_12345”. In a generative approach, we incorporate these ID tokens into the vocabulary of a language model. The model is trained on sequences comprising both text and item IDs (representing either search or recommendation interactions), and it learns to generate the next token in the sequence, which might be an item ID.

Generative search: Suppose the user’s query is “crime thriller set in American South”. We feed this query into a language model and ask it to continue the sequence with a relevant item. The model might output the token <item_42>, which corresponds to a movie in our catalog. Essentially, the model is saying: in response to the query “crime thriller set in American South”, item_42 is a likely relevant result. Under the hood, the model learned this by being trained on many query-to-item pairs, where the item token is the target to predict given the query text.

Generative recommendation: Consider a user’s watch history: item_15 → item_37 → item_9 (perhaps these IDs correspond to three movies watched in sequence). We prompt the model with this sequence of item IDs and ask it to predict what comes next. It might output <item_20>, suggesting that item_20 (another movie) would logically follow given the viewing pattern. This works because the model was trained on lots of sequences of item interactions from many users, learning a kind of “language model” over item sequences (much like how a word-based language model predicts the next word in a sentence). I have written about generative recommenders in a previous post.

Crucially, the same model can do both of these tasks. We can train the language model on a combined dataset that includes “query -> item” pairs and “sequence of items -> next item” sequences. In training, some examples look like [Query tokens] -> [Relevant Item ID] and others look like [History Item IDs] -> [Next Item ID]. The model’s job is to predict item IDs in both contexts. By doing so, it develops a unified understanding of how items relate to either text descriptions (queries) or other items (sequential usage).
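Here is a minimal sketch of how such a mixed dataset might be assembled before tokenization. It assumes whitespace tokenization and a hypothetical `<item_N>` token format matching the examples above. Every prefix of a history is turned into its own next-item example, which is one common way to use sequential data.

```python
def item_token(item_id: int) -> str:
    """Hypothetical single-token representation of an item ID."""
    return f"<item_{item_id}>"

def build_examples(search_pairs, histories):
    """Mix (query -> item) and (history -> next item) examples into one dataset."""
    examples = []
    for query, item_id in search_pairs:
        examples.append((query.lower().split(), item_token(item_id)))
    for history in histories:
        # Every prefix of a history predicts the item that follows it.
        for t in range(1, len(history)):
            examples.append(([item_token(i) for i in history[:t]], item_token(history[t])))
    return examples

def build_vocab(examples):
    """Text tokens and item tokens share a single vocabulary."""
    tokens = set()
    for context, target in examples:
        tokens.update(context)
        tokens.add(target)
    return {tok: idx for idx, tok in enumerate(sorted(tokens))}

search_pairs = [("crime thriller set in American South", 42)]
histories = [[15, 37, 9, 20]]
examples = build_examples(search_pairs, histories)
vocab = build_vocab(examples)
for context, target in examples:
    print(" ".join(context), "->", target)
```

This prints one search example and three recommendation examples, all sharing the same target format.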

This generative joint model is essentially performing multi-task learning: language modeling over mixed types of sequences. At inference time, we just format the input appropriately:

For search, input the query text tokens and ask for an item token as output.

For recommendation, input the user’s recent item tokens and ask for an item token as output.

What are the benefits of the generative approach?

Simplicity of architecture. We have one model (usually a transformer-based LM) that does everything. There’s no separate retrieval index or complex ranking pipeline; the model “knows” the space of items and can pick one (or a few) to recommend.

The model can potentially capture more complex patterns. For example, it could learn to generate a series of items, not just one, or incorporate rich context in a flexible way (LLMs are very good at ingesting arbitrary context sequences).

Training is end-to-end. Since the model directly outputs item Ids, one can optimize directly for recommending the correct item (using cross-entropy loss on the item token). In contrast, a traditional recommender might have multiple stages (score all candidates, then re-rank, etc.) which each require separate training objectives.
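The cross-entropy objective on the item token is short enough to write out. This sketch assumes the model has already produced one logit per vocabulary entry, covering text and item tokens alike. The loss is identical whether the context was a query or an item history. The `item_token_loss` name is hypothetical.

```python
import numpy as np

def item_token_loss(logits: np.ndarray, target_id: int) -> float:
    """Cross-entropy of the target item token under a softmax over the vocabulary."""
    shifted = logits - logits.max()  # subtract the max for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum())
    return float(-log_probs[target_id])

vocab_size = 11
logits = np.zeros(vocab_size)
logits[3] = 2.0  # the model favors token 3, assumed here to be the target item
print(item_token_loss(logits, target_id=3))
```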

However, generative retrieval is a newer idea and comes with challenges.

The model’s output space (the vocabulary) includes potentially hundreds of thousands of item tokens. This can strain the model’s capacity and slow down training (each prediction is a choice among many possible tokens).

Another challenge is producing ranked lists: you typically want the top-K results, not just one, so you either sample multiple outputs or use beam search, which can be tricky to tune for diversity and relevance.
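In the simplified case where every item is exactly one token, there is a simpler option than sampling or beam search. The top-K list can be read directly off the next-token distribution, restricted to item tokens. The sketch below assumes item tokens are recognizable by a hypothetical `<item_` prefix. If an item ID spans several tokens, this shortcut does not apply and beam search is needed.

```python
import numpy as np

def top_k_item_tokens(logits: np.ndarray, id_to_token: list[str], k: int) -> list[str]:
    """Rank only item tokens: mask out text tokens, then take the k highest logits."""
    is_item = np.array([tok.startswith("<item_") for tok in id_to_token])
    masked = np.where(is_item, logits, -np.inf)
    k = min(k, int(is_item.sum()))  # never return masked text tokens
    top = np.argsort(-masked)[:k]
    return [id_to_token[i] for i in top]

id_to_token = ["crime", "<item_9>", "thriller", "<item_42>", "<item_20>"]
logits = np.array([5.0, 0.5, 4.0, 2.0, 1.0])  # text tokens score high but are masked
print(top_k_item_tokens(logits, id_to_token, k=2))  # ['<item_42>', '<item_20>']
```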

Spotify Research

A team at Spotify conducted empirical studies showing clear gains from joint modeling:

Simulated Experiments: In controlled simulations, researchers manipulated how much overlap there is between search and recommendation data (e.g., how often the same item appears in both). They found that when there is even moderate overlap or correlation, a joint model significantly outperforms separate models. For instance, when 50% of the “concepts” (item clusters) were shared between tasks, the jointly trained model had much higher recall in retrieval compared to a search-only model. This aligns with the idea that the model is benefiting from complementary information.

Real-world Datasets: Joint modeling has been tested on real combined datasets:

Movie domain: Using MovieLens for recommendations and a film-tag or plot keyword dataset for search queries. Here, a joint model was trained to both recommend movies (predict ratings) and retrieve movies by content tags.

Music domain: Using the Million Playlist Dataset (user-created Spotify playlists) for recommendation, and search queries from a music catalog for search. Playlist titles served as pseudo-queries too.

Podcast domain: Using actual search queries from Spotify’s podcast search and user listening sessions as recommendation sequences.

Across these, the joint generative model consistently outperformed single-task models:

In search tasks, the unified model improved recall@30 by about 11–16% compared to a search-only generative model.

In recommendation tasks, the unified model improved metrics (like recall or NDCG) by 3–24% compared to a recommendation-only model.

These are sizable gains, considering how mature the baseline systems already are. An improvement of even a few percent in recommendation can mean a significantly better user experience (more relevant suggestions), and double-digit improvements in search for head queries are impressive.

It’s worth noting that the largest boosts were often on “head” queries or popular items – meaning the joint model particularly helped on things that both tasks deal with, but it also did better on “tail” content by having more data to learn from overall.

Why Joint Generative Training Helps

Spotify researchers have analyzed why a jointly-trained model (serving both search and rec) might outperform two specialized models. Two main hypotheses have emerged:

Hypothesis 1: Popularity Regularization.

Different systems might give the model conflicting signals about how “popular” or generally relevant an item is. For example, imagine an item (say, a niche indie song) that appears in very few search queries (perhaps because not many people know to search for it) but in many users’ playlists (indicating it is well liked among those who discovered it). A search-only model, learning from query logs, might underestimate this item’s importance and rarely rank it highly because it seldom saw it in training. A recommendation-only model, however, might learn that this item is well liked (many people who heard it engaged positively). In a joint model, the recommendation data acts as a regularizer on popularity: it can adjust the model’s tendencies so that, even in search scenarios, the model is more likely to surface items that are truly popular or high-quality, not just those that are frequently queried. In effect, the model’s notion of an item’s overall appeal is more accurate because it is informed by both sources.

Hypothesis 2: Latent Representation Enrichment.

The second hypothesis is about the embedding or representation of items and queries learned by the model. In a search model, items that often co-occur in queries (or share query terms) will end up with similar representations. In a recommendation model, items that are co-consumed by users will have similar representations. These are related but not identical signals. Joint training can enrich item representations by combining both semantic similarity (from search) and usage similarity (from recommendations). For instance, consider two movies, Movie A and Movie B. They might not often be mentioned together in queries (so a search model might not particularly link them), but if many users who watch A also watch B, a recommendation model will embed them close together. If a query is relevant to Movie A, a search-only model might not rank Movie B highly, but a joint model, which knows A and B are closely related in the latent space thanks to user data, might correctly bring up Movie B as well. In short, joint training creates a more connected graph among items: if recommendation data says items X and Y are related, and search data says Y and Z are related, the joint model can indirectly learn that X, Y, Z form a cluster of related content. This can improve recall and the ability to handle tail cases (where direct query evidence is missing, but the model can fill the gap via learned associations).
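The X, Y, Z intuition can be made concrete with a toy graph. This is a deliberate simplification that assumes relatedness is a binary edge and clusters are connected components. A trained model learns graded similarities in embedding space rather than hard clusters. Neither signal alone links X with Z, but their union does.

```python
def clusters(edges, nodes):
    """Connected components via union-find with path halving."""
    parent = {n: n for n in nodes}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in edges:
        parent[find(a)] = find(b)
    groups = {}
    for n in nodes:
        groups.setdefault(find(n), set()).add(n)
    return sorted(groups.values(), key=lambda g: sorted(g))

items = ["X", "Y", "Z"]
rec_edges = [("X", "Y")]     # co-consumed by users (recommendation signal)
search_edges = [("Y", "Z")]  # relevant to the same queries (search signal)
print(clusters(rec_edges, items))                 # X-Y linked, Z alone
print(clusters(search_edges, items))              # Y-Z linked, X alone
print(clusters(rec_edges + search_edges, items))  # one cluster: X, Y, Z
```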

Analysis of Model Behavior: To test these hypotheses (popularity vs latent factors), researchers examined how the joint model’s predictions differ from the single-task models:

They found that the overall popularity distribution of recommended items didn’t change dramatically with joint training (only about a 1% shift on average). This suggests the model didn’t simply start recommending popular items everywhere (Hypothesis 1 had a relatively small effect).

However, they observed a huge increase (over 200% in one analysis) in the diversity of item co-occurrences in the recommendations. In other words, the joint model was much more likely to recommend pairs of items that didn’t normally appear together in the single-task model outputs but did have a connection through user behavior. This is evidence for Hypothesis 2: the model’s item embeddings were significantly reshaped by the presence of both signals, capturing relations that were missed before.
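The two diagnostics can be sketched as follows. The exact metric definitions used in the Spotify analysis are not given in this post, so these versions are hypothetical:

- the mean popularity of recommended items;
- the number of distinct item pairs recommended together.

The toy lists are invented purely to show the shape of the comparison (unchanged popularity, more distinct co-occurring pairs). They are not data from the study.

```python
from itertools import combinations
from statistics import mean

def mean_popularity(rec_lists, popularity):
    """Average popularity of all recommended items across lists."""
    return mean(popularity[i] for recs in rec_lists for i in recs)

def distinct_cooccurring_pairs(rec_lists):
    """Number of distinct unordered item pairs that appear together in some list."""
    pairs = set()
    for recs in rec_lists:
        pairs.update(frozenset(p) for p in combinations(set(recs), 2))
    return len(pairs)

def relative_change(before, after):
    """Percentage change from before to after."""
    return (after - before) / before * 100

popularity = {"a": 50, "b": 40, "c": 45, "d": 45}
single_task = [["a", "b"], ["a", "b"], ["c", "d"]]
joint = [["a", "b"], ["a", "c"], ["b", "d"]]
print(relative_change(mean_popularity(single_task, popularity), mean_popularity(joint, popularity)))
print(relative_change(distinct_cooccurring_pairs(single_task), distinct_cooccurring_pairs(joint)))
```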

In a qualitative sense, the joint model “fills in the gaps.” If a search model knew items A and B separately, and a rec model knew B and C, the joint model ends up understanding A-B-C all together. That leads to recommendations that might be novel (surfacing item C for a query about A, even if C was never directly searched, because C is automatically associated through B).

Conclusion and Recommendations

The trend toward joint modeling of search and recommendation is part of a broader evolution in AI: moving away from siloed task-specific models towards more general, multi-task systems that leverage all the data and signals available. By viewing search and recommendation as two sides of a coin, we can build systems that are both more powerful and more maintainable.

Key takeaways:

Search and recommendation can be unified by recognizing they both rank items given a context. The primary difference is whether the context is an explicit query or implicit user data.

Joint models (in both dense and generative forms) have demonstrated improved performance: better retrieval accuracy and more personalized, relevant results, especially by capturing relationships that one task alone might miss.

Unified approaches reduce the overhead of managing many separate models. This can free up engineering and research effort to focus on one truly robust system instead of many specialized ones.

In practice, adding a task indicator or context flag helps a unified model know which mode it’s operating in, ensuring it still meets the specific needs of search vs recommendation where they differ (e.g., handling novel queries vs. filtering seen content for a user).

Multi-task learning is not without challenges: one must be careful to avoid negative transfer. But techniques like careful training schedules, separate encoder components, or larger model capacity can mitigate this.

In conclusion, unifying search and recommendation is a promising path toward smarter information systems. It aligns with the intuition that “if it’s good enough to recommend, it’s probably something that should be high in search results and vice versa.” Joint modeling forces our algorithms to confront that intuition and act on it, leading to richer user experiences. For scientists and engineers, it’s an exciting opportunity to break down silos between research areas and combine techniques from both fields. As our models become more capable and data-hungry, feeding them all available signals - and letting them figure out the best way to mix them - is a natural next step.

Happy ranking, whether by queries or by quirky user tastes!

If you find this post useful, I would appreciate it if you cite it as:

@misc{verma2025joint-search-recsys,
  title={Joint Retrieval and Recommendation Modeling},
  year={2025},
  url={\url{https://januverma.substack.com/p/join-retrieval-and-recommendation}},
  note={Incomplete Distillation}
}